Usd Survival Guide

This repository aims to be a practical onboarding guide to USD for software developers and pipeline TDs. For more information what makes this guide unique, see the motivation section.

This guide was officially introduced at Siggraph 2023 - Houdini Hive. Special thanks to SideFX for hosting me and throwing such a cool Houdini lounge and presentation line up!

Contributors

License

This guide is licensed under the Apache License 2.0. For more information as to how this affects copyright and distribution see our license page.

Prerequisites

Before starting our journey, make sure you are all packed:

- It is expected to have a general notion of what Usd is. If this is your first contact with the topic, check out the links in motivation. The introduction resources there take 1-2 hours to consume for a basic understanding and will help with understanding this guide.

- A background in VFX industry. Though not strictly necessary, it helps to know the general vocabulary of the industry to understand this guide. This guide aims to stay away from Usd specific vocabulary, where possible, to make it more accessible to the general VFX community.

- Motivation to learn new stuff. Don't worry to much about all the custom Usd terminology being thrown at you at the beginning, you'll pick it up it no time once you start working with Usd!

Next Steps

To get started, let's head over to the Core Elements section!

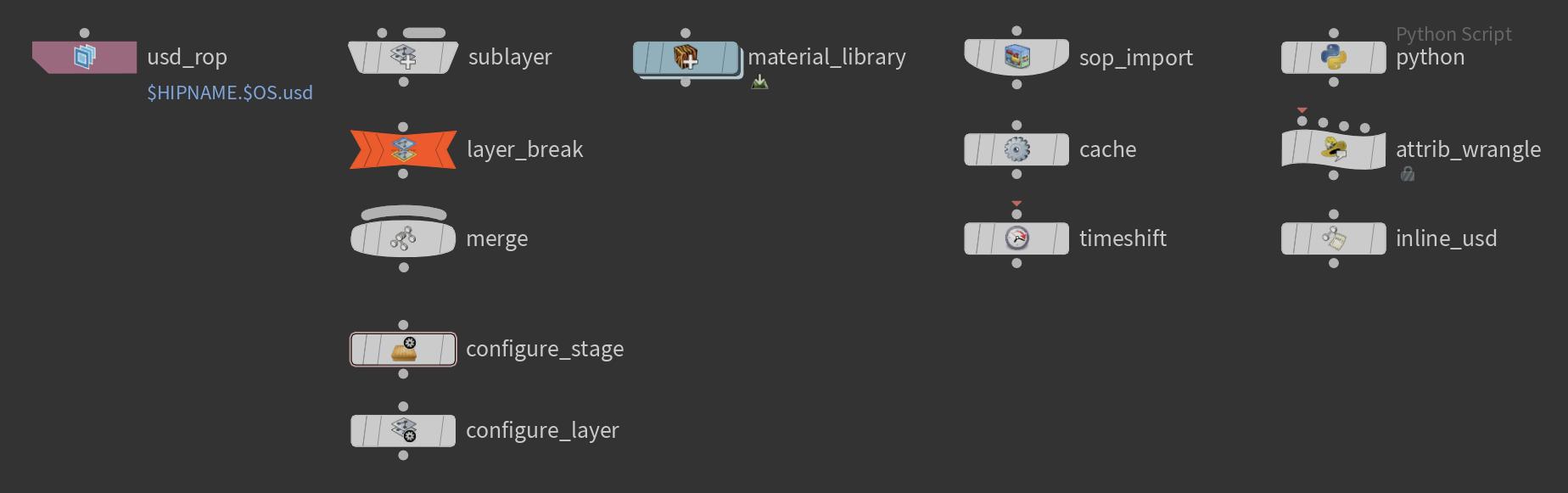

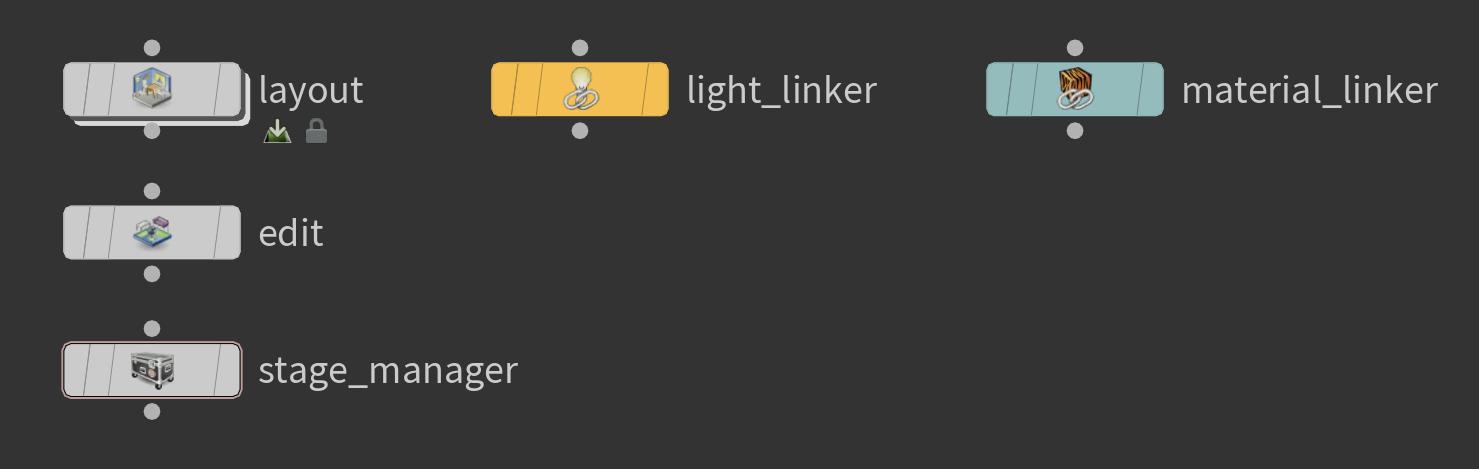

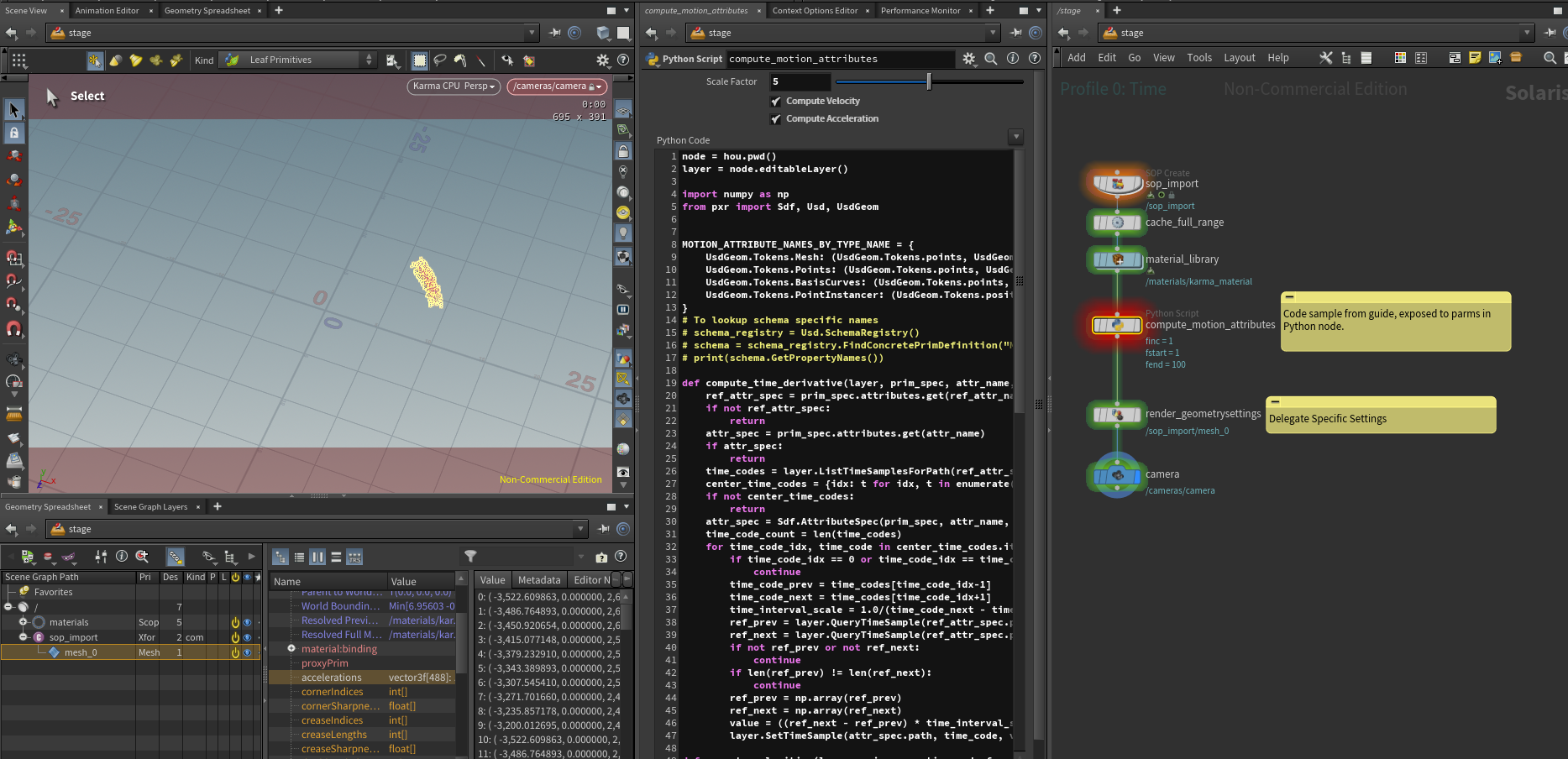

This guide primarily uses Houdini to explain concepts, as it is one of the most accessible and easiest ways to learn the ways of Usd with. You can install a non-commercial version for free from their website. It is highly recommended to use it when following this guide.

Motivation

As USD has been consistently increasing its market share in the recent years, it is definitely a technology to be aware of when working in any industry that uses 3d related data. It is becoming the de facto standard format on how different applications and vendors exchange their data.

You might be thinking:

Oh no another guide! Do we really need this?

This guide aims to solve the following 'niche':

- It aims to be an onboarding guide for software developers & pipeline developers so that you can hit the ground running.

- It aims to be practical as opposed to offering a high-level overview. This means you'll find a lot of code examples from actual production scenarios as well as a more hands on approach instead of overviews/terminology explanations. We'll often link to resources you can look into before a certain section to have a better understanding should vocabulary issues arise.

- It aims to soften the steep learning curve that some aspects of USD have by having a look at common production examples so you can have those sweet "aha, that's how it works" moments.

Basically think of it as a balance of links listed in the below resources section.

If this is your first time working with Usd, we recommend watching this 20 minute video from Apple:

Understand USD fundamentals (Frm WWDC 2022)

It covers the basic terminology in a very succinct manner.

Resources

We highly recommend also checking out the following resources:

- USD - Pixar

- USD - Interest Forum

- USD - Working Group

- USD - SideFX/Houdini

- Book Of USD - Remedy Entertainment

- USD CookBook - Colin Kennedy

- USD - Nvidia

- USD - Apple

At this point of the guide, we just want to state, that we didn't re-invent the wheel here: A big thank you to all the (open-source) projects/tutorials/guides that cover different aspects of Usd. You have been a big help in designing this guide as well as giving great insights. There is no one-to-rule them all documentation, so please consider contributing back to these projects if possible!

Contributing and Acknowledgements

Please consider contributing back to the Usd project in the official Usd Repository and via the Usd User groups.

Feel free to fork this repository and share further examples/improvements. If you run into issues, please flag them by submitting a ticket.

Contributors

Structure

On this page will talk about how this guide is structured and what the best approach to reading it is.

Table of Contents

Structure

Most of our sections follow this simple template:

- Table of Contents: Here we show the structure of the individual page, so we can jump to what we are interested in.

- TL;DR (Too Long; Didn't Read) - In-A-Nutshell: Here we give a short summary of the page, the most important stuff without all the details.

- What should I use it for?: Here we explain what relevance the page has in our day to day work with USD.

- Resources: Here we provide external supplementary reading material, often the USD API docs or USD glossary.

- Overview: Here we cover the individual topic in broad strokes, so you get the idea of what it is about.

This guide uses Houdini as its "backbone" for exploring concepts, as it is one of the easiest ways to get started with USD.

You can grab free-for-private use copy of Houdini via the SideFX website. SideFX is the software development company behind the Houdini.

Almost all demos we show are from within Houdini, although you can also save the output of all our code snippets to a .usd file and view it in USD view or USD manager by calling stage.Export("/file/path.usd")/layer.Export("/file/path.usd")

You can find all of our example files in our Usd Survival Guide - GitHub Repository as well in our supplementary Usd Asset Resolver - GitHub Repository. Among these files are Houdini .hip scenes, Python snippets and a bit of C++/CMake code.

We also indicate important to know tips with stylized blocks, these come in the form of:

We often provide "Pro Tips" that give you pointers how to best approach advanced topics.

Danger blocks warn you about common pitfalls or short comings of USD and how to best workaround them.

Collapsible Block | Click me to show my content!

For longer code snippets, we often collapse the code block to maintain site readability.

print("Hello world!")

Learning Path

We recommend working through the guide from start to finish in chronological order. While we can do it in a random order, especially our Basic Building Blocks of Usd and Composition build on each other and should therefore be done in order.

To give you are fair warning though, we do deep dive a bit in the beginning, so just make sure you get the gist of it and then come back later when you feel like you need a refresher or deep dive on a specific feature.

How To Run Our Code Examples

We also have code blocks, where if you hover other them, you can copy the content to you clipboard by pressing the copy icon on the right. Most of our code examples are "containered", meaning they can run by themselves.

This does come with a bit of the same boiler plate code per example. The big benefit though is that we can just copy and run them and don't have to initialize our environment.

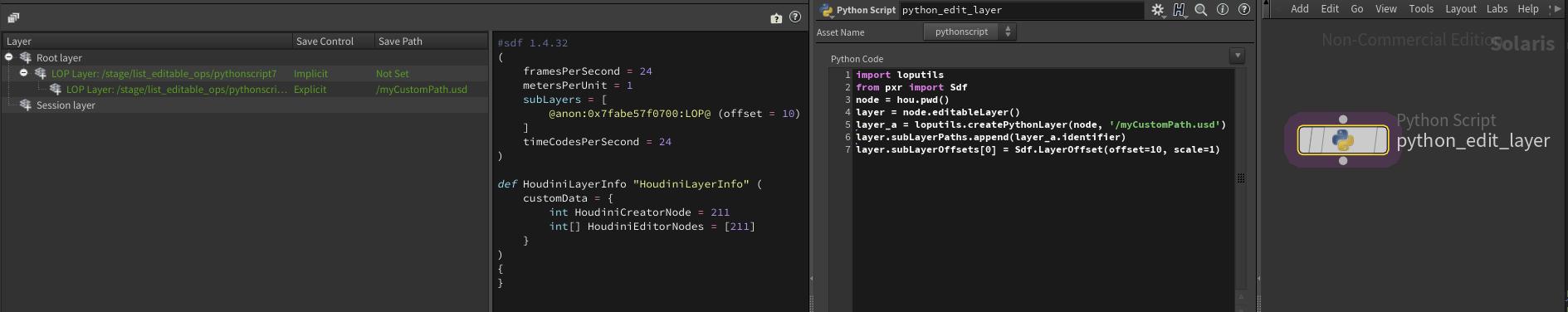

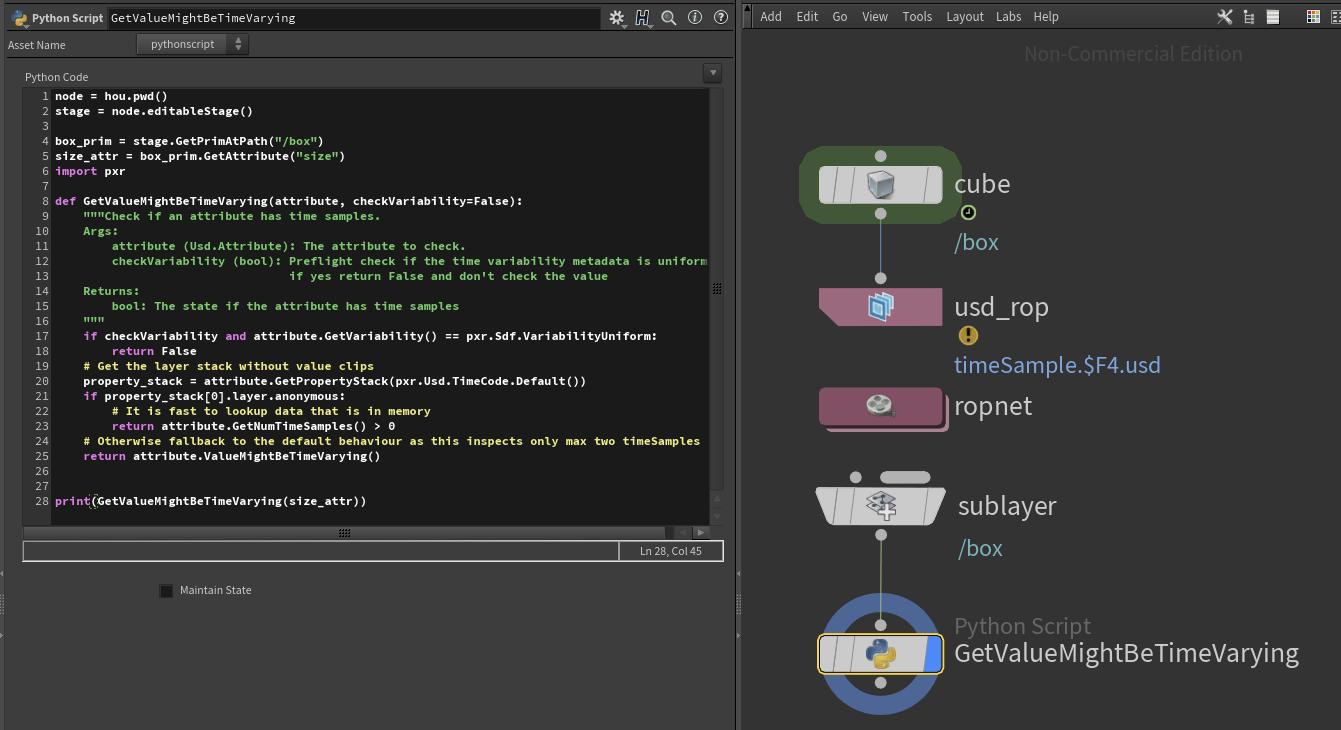

Most snippets create in memory stages or layers. If we want to use the snippets in a Houdini Python LOP, we have to replace the stage/layer access as follows:

In Houdini we can't call hou.pwd().editableStage() and hou.pwd().editableLayer() in the same Python LOP node.

Therefore, when running our high vs low level API examples, make sure you are using two different Python LOP nodes.

from pxr import Sdf, Usd

## Stages

# Native USD

stage = Usd.Stage.CreateInMemory()

# Houdini Python LOP

stage = hou.pwd().editableStage()

## Layers

# Native USD

layer = Sdf.Layer.CreateAnonymous()

# Houdini Python LOP

layer = hou.pwd().editableLayer()

Documentation

If you want to locally build this documentation, you'll have to download mdBook, mdBook-admonish and mdBook-mermaid and add their parent directories to the PATHenv variable so that the executables are found.

You can do this via bash (after running source setup.sh):

export MDBOOK_VERSION="0.4.28"

export MDBOOK_ADMONISH_VERSION="1.9.0"

export MDBOOK_MERMAID_VERSION="0.12.6"

export MDBOOK_SITEMAP_VERSION="0.1.0"

curl -L https://github.com/rust-lang/mdBook/releases/download/v$MDBOOK_VERSION/mdbook-v$MDBOOK_VERSION-x86_64-unknown-linux-gnu.tar.gz | tar xz -C ${REPO_ROOT}/tools

curl -L https://github.com/tommilligan/mdbook-admonish/releases/download/v$MDBOOK_ADMONISH_VERSION/mdbook-admonish-v$MDBOOK_ADMONISH_VERSION-x86_64-unknown-linux-gnu.tar.gz | tar xz -C ${REPO_ROOT}/tools

curl -L https://github.com/badboy/mdbook-mermaid/releases/download/v$MDBOOK_MERMAID_VERSION/mdbook-mermaid-v$MDBOOK_MERMAID_VERSION-x86_64-unknown-linux-gnu.tar.gz | tar xz -C ~/tools

export PATH=${REPO_ROOT}/tools:$PATH

You then can just run the following to build the documentation in html format:

./docs.sh

The documentation will then be built in docs/book.

Future Development

We do not cover the following topics yet:

- Cameras

- Render related schemas

- USD C++

- OCIO

- Render Procedurals

USD Essentials

In our essentials section, we cover the basics of USD from a software developer perspective.

That means we go over the most common base classes we'll interacting with in our day-to-day work.

Our approach is to start by looking at the smallest elements in USD and increasing complexity until we talk about how different broader aspects/concepts of USD work together.

That does mean we do not provide a high level overview. As mentioned in our motivation section, this guide is conceptualized to be an onboarding guide for developers. We therefore take a very code heavy and deep dive approach from the get-go.

Don't be scared though! We try to stay as "generic" as possible, avoiding USD's own terminology where possible.

Are you ready to dive into the wonderful world of USD? Then let's get started!

API Overview

Before we dive into the nitty gritty details, let's first have a look at how the USD API is structured.

Overall there are two different API "levels":

flowchart TD

pxr([pxr]) --> highlevel([High Level -> Usd])

pxr --> lowlevel([Low Level -> PcP/Sdf])

Most tutorials focus primarily on the high level API, as it is a bit more convenient when starting out. The more you work in Usd though, the more you'll start using the lower level API. Therefore this guide will often have examples for both levels.

We'd actually recommend starting out with the lower level API as soon as you can, as it will force you to write performant code from the start.

Now there are also a few other base modules that supplement these two API levels, we also have contact with them in this guide:

- Gf: The Graphics Foundations module provides all math related classes and utility functions (E.g matrix and vector data classes)

- Vt : The Value Types module provides the value types for what USD can store. Among these is also the

Vt.Array, which allows us to efficiently map USD arrays (of various data types like int/float/vectors) to numpy arrays for fast data processing. - Plug: This module manages USD's plugin framework.

- Tf: The Tools Foundations module gives us access to profiling, debugging and C++ utilities (Python/Threading). It also houses our type registry (for a variety of USD classes).

For a full overview, visit the excellently written USD Architecture Overview - Official API Docs section.

TL;DR - API Overview In-A-Nutshell

Here is the TL;DR version. Usd is made up of two main APIs:

- High level API:

- Low level API:

Individual components of Usd are loaded via a plugin based system, for example Hydra, kinds, file plugins (Vdbs, abc) etc.

Here is a simple comparison:

### High Level ### (Notice how we still use elements of the low level API)

from pxr import Sdf, Usd

stage = Usd.Stage.CreateInMemory()

prim_path = Sdf.Path("/bicycle")

prim = stage.DefinePrim(prim_path, "Xform")

attr = prim.CreateAttribute("tire:size", Sdf.ValueTypeNames.Float)

attr.Set(10)

### Low Level ###

from pxr import Sdf

layer = Sdf.Layer.CreateAnonymous()

prim_path = Sdf.Path("/bicycle")

prim_spec = Sdf.CreatePrimInLayer(layer, prim_path)

prim_spec.specifier = Sdf.SpecifierDef

prim_spec.typeName = "Xform"

attr_spec = Sdf.AttributeSpec(prim_spec, "tire:size", Sdf.ValueTypeNames.Float)

attr_spec.default = 10

What should I use it for?

You'll be using these two API levels all the time when working with Usd. The high level API is often used with read ops, the low level with write ops or inspecting the underlying caches of the high level API.

Resources

When should I use what?

As a rule of thumb, you use the high level API when:

- Reading data of a stage

- Using Usd Schema Classes (E.g. UsdGeomMesh, UsdClipsAPI, UsdGeomPrimvarsAPI)

And the low level API when:

- Creating/Copying/Moving data of a layer

- Performance is critical (When is it ever not?)

High Level API

The Usd Core API docs page is a great place to get an overview over the high level API:

Basically everything in the pxr.Usd namespace nicely wraps things in the pxr.Sdf/pxr.Pcp namespace with getters/setters, convenience classes and functions.

Therefore it is a bit more OOP oriented and follows C++ code design patterns.

This level always operates on the composed state of the stage. This means as soon as you are working stages, you'll be using the higher level API. It also takes care of validation data/setting common data, whereas the lower level API often leaves parts up to the user.

Low Level API

Great entry points for the lower level API:

This level always operates individual layers. You won't have access to the stage aka composed view of layers.

Workflows

The typical workflow is to do all read/query operations in the high level API by creating/accessing a stage and then to do all the write operations in the low level API.

In DCCs, the data creation is done by the software, after that it is your job to massage the data to its final form based on what your pipeline needs:

In the daily business, you'll be doing this 90% of the time:

- Rename/Remove prims

- Create additional properties/attributes/relationships

- Add metadata

Sounds simple, right? Ehm right??

Well yes and no. This guide tries to give you good pointers of common pitfalls you might run into.

So let's get started with specifics!

Elements

In this sub-section we have a look at the basic building blocks of Usd.

Our approach is incrementally going from the smallest building blocks to the larger ones (except for metadata we squeeze that in later as a deep dive), so the recommended order to work through is as follows:

- Paths

- Data Containers (Prims & Properties)

- Data Types

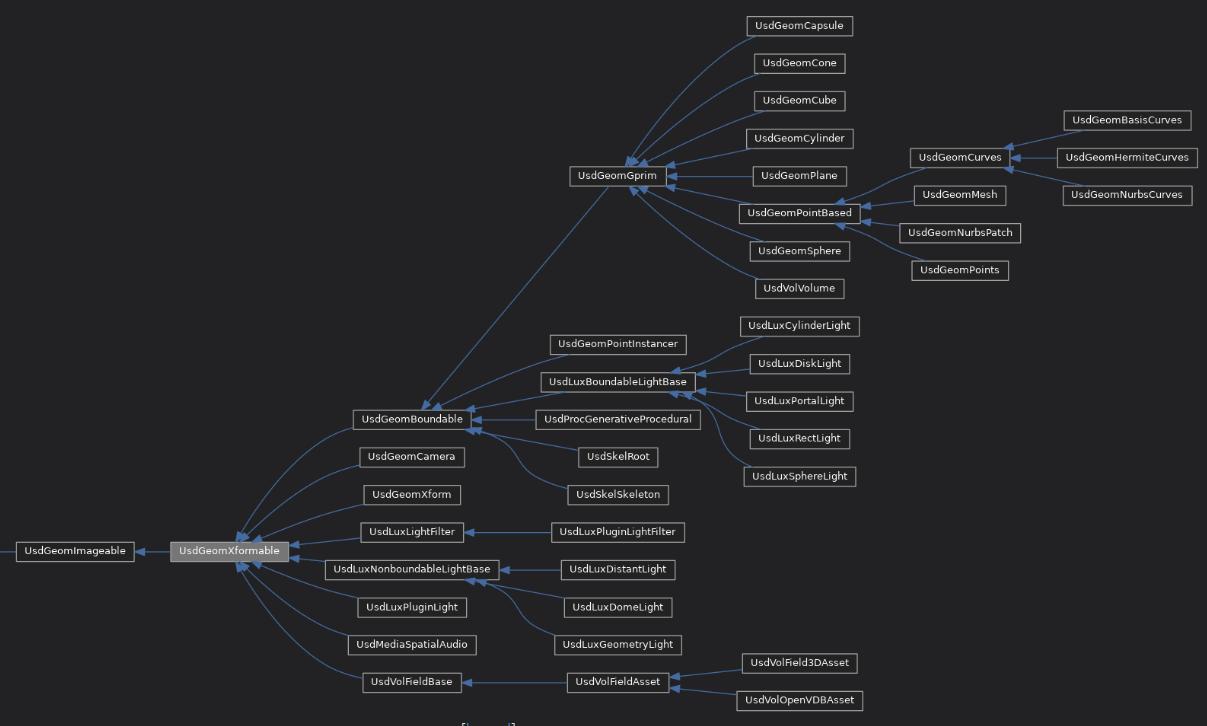

- Schemas ('Classes' in OOP terminology)

- Metadata

- Layers & Stages (Containers of actual data)

- Traversing/Loading Data (Purpose/Visibility/Activation/Population)

- Animation/Time Varying Data

- Materials

- Transforms

- Collections

- Notices/Event Listeners

- Standalone Utilities

This will introduce you to the core classes you'll be using the most and then increasing the complexity step by step to see how they work together with the rest of the API.

We try to stay terminology agnostic as much as we can, but some vocab you just have to learn to use USd. We compiled a small cheat sheet here, that can assist you with all those weird Usd words.

Getting down the basic building blocks down is crucial, so take your time! In the current state the examples are a bit "dry", we'll try to make it more entertaining in the future.

Get yourself comfortable and let's get ready to roll! You'll master the principles of Usd in no time!

Paths

As Usd is a hierarchy based format, one of its core functions is handling paths. In order to do this, Usd provides the pxr.Sdf.Path class. You'll be using quite a bunch, so that's why we want to familiarize ourselves with it first.

pxr.Sdf.Path("/My/Example/Path")

Table of Contents

TL;DR - Paths In-A-Nutshell

Here is the TL;DR version: Paths can encode the following path data:

Prim: "/set/bicycle" - Separator/Property:Attribute: "/set/bicycle.size" - Separator.Relationship: "/set.bikes[/path/to/target/prim]" - Separator./ Targets[](Prim to prim target paths e.g. collections of prim paths)

Variants("/set/bicycle{style=blue}wheel.size")

from pxr import Sdf

# Prims

prim_path = Sdf.Path("/set/bicycle")

prim_path_str = Sdf.Path("/set/bicycle").pathString # Returns the Python str "/set/bicycle"

# Properties (Attribute/Relationship)

property_path = Sdf.Path("/set/bicycle.size")

property_with_namespace_path = Sdf.Path("/set/bicycle.tire:size")

# Relationship targets

prim_rel_target_path = Sdf.Path("/set.bikes[/set/bicycles]") # Prim to prim linking (E.g. path collections)

# Variants

variant_path = prim_path.AppendVariantSelection("style", "blue") # Returns: Sdf.Path('/set/bicycle{style=blue}')

variant_path = Sdf.Path('/set/bicycle{style=blue}frame/screws')

What should I use it for?

Anything that is path related in your hierarchy, use Sdf.Path objects. It will make your life a lot easier than if you were to use strings.

Resources

Overview

Each element in the path between the "/" symbol is a prim similar to how on disk file paths mark a folder or a file.

Most Usd API calls that expect Sdf.Path objects implicitly take Python strings as well, we'd recommend using Sdf.Paths from the start though, as it is faster and more convenient.

We recommend going through these small examples (5-10 min), just to get used to the Path class.

Creating a path & string representation

from pxr import Sdf

path = Sdf.Path("/set/bicycle")

path_name = path.name # Similar to os.path.basename(), returns the last element of the path

path_empty = path.isEmpty # Property to check if path is empty

# Path validation (E.g. for user created paths)

Sdf.Path.IsValidPathString("/some/_wrong!_/path") # Returns: (False, 'Error Message')

# Join paths (Similar to os.path.join())

path = Sdf.Path("/set/bicycle")

path.AppendPath(Sdf.Path("frame/screws")) # Returns: Sdf.Path("/set/bicycle/frame/screws")

# Manually join individual path elements

path = Sdf.Path(Sdf.Path.childDelimiter.join(["set", "bicycle"]))

# Get the parent path

parent_path = path.GetParentPath() # Returns Sdf.Path("/set")

parent_path.IsRootPrimPath() # Returns: True (Root prims are prims that only

# have a single '/')

ancestor_range = path.GetAncestorsRange() # Returns an iterator for the parent paths, the same as recursively calling GetParentPath()

# Add child path

child_path = path.AppendChild("wheel") # Returns: Sdf.Path("/set/bicycle/wheel")

# Check if path is a prim path (and not a attribute/relationship path)

path.IsPrimPath() # Returns: True

# Check if path starts with another path

# Important: It actually compares individual path elements (So it is not a str.startswith())

Sdf.Path("/set/cityA/bicycle").HasPrefix(Sdf.Path("/set")) # Returns: True

Sdf.Path("/set/cityA/bicycle").HasPrefix(Sdf.Path("/set/city")) # Returns: False

Sdf.Path("/set/bicycle").GetCommonPrefix(Sdf.Path("/set")) # Returns: Sdf.Path("/set")

# Relative/Absolute paths

path = Sdf.Path("/set/cityA/bicycle")

rel_path = path.MakeRelativePath("/set") # Returns: Sdf.Path('cityA/bicycle')

abs_path = rel_path.MakeAbsolutePath("/set") # Returns: Sdf.Path('/set/cityA/bicycle')

abs_path.IsAbsolutePath() # Returns: True -> Checks path[0] == "/"

# Do not use this is performance critical loops

# See for more info: https://openusd.org/release/api/_usd__page__best_practices.html

# This gives you a standard python string

path_str = path.pathString

Special Paths: emptyPath & absoluteRootPath

from pxr import Sdf

# Shortcut for Sdf.Path("/")

root_path = Sdf.Path.absoluteRootPath

root_path == Sdf.Path("/") # Returns: True

# We'll cover in a later section how to rename/remove things in Usd,

# so don't worry about the details how this works yet. Just remember that

# an emptyPath exists and that its usage is to remove something.

src_path = Sdf.Path("/set/bicycle")

dst_path = Sdf.Path.emptyPath

edit = Sdf.BatchNamespaceEdit()

edit.Add(src_path, dst_path)

Variants

We can also encode variants into the path via the {variantSetName=variantName} syntax.

# Variants (see the next sections) are also encoded

# in the path via the "{variantSetName=variantName}" syntax.

from pxr import Sdf

path = Sdf.Path("/set/bicycle")

variant_path = path.AppendVariantSelection("style", "blue") # Returns: Sdf.Path('/set/bicycle{style=blue}')

variant_path = Sdf.Path('/set/bicycle{style=blue}frame/screws')

# Property path to prim path with variants

property_path = Sdf.Path('/set/bicycle{style=blue}frame/screws.size')

variant_path = property_path.GetPrimOrPrimVariantSelectionPath() # Returns: Sdf.Path('/set/bicycle{style=blue}frame/screws')

# Typical iteration example:

variant_path = Sdf.Path('/set/bicycle{style=blue}frame/screws')

if variant_path.ContainsPrimVariantSelection(): # Returns: True # For any variant selection in the whole path

for parent_path in variant_path.GetAncestorsRange():

if parent_path.IsPrimVariantSelectionPath():

print(parent_path.GetVariantSelection()) # Returns: ('style', 'blue')

# When authoring relationships, we usually want to remove all variant encodings in the path:

variant_path = Sdf.Path('/set/bicycle{style=blue}frame/screws')

prim_rel_target_path = variant_path.StripAllVariantSelections() # Returns: Sdf.Path('/set/bicycle/frame/screws')

Properties

Paths can also encode properties (more about what these are in the next section).

Notice that attributes and relationships are both encoded with the "." prefix, hence the name property is used to describe them both.

When using Usd, we'll rarely run into the relationship [] encoded targets paths. Instead we use the Usd.Relationship/Sdf.RelationshipSpec methods to set the path connections. Therefore it is just good to know they exist.

# Properties (see the next section) are also encoded

# in the path via the "." (Sdf.Path.propertyDelimiter) token

from pxr import Sdf

path = Sdf.Path("/set/bicycle.size")

property_name = path.name # Be aware, this will return 'size' (last element)

# Append property to prim path

Sdf.Path("/set/bicycle").AppendProperty("size") # Returns: Sdf.Path("/set/bicycle.size")

# Properties can also be namespaced with ":" (Sdf.Path.namespaceDelimiter)

path = Sdf.Path("/set/bicycle.tire:size")

property_name = path.name # Returns: 'tire:size'

property_name = path.ReplaceName("color") # Returns: Sdf.Path("/set/bicycle.color")

# Check if path is a property path (and not a prim path)

path.IsPropertyPath() # Returns: True

# Check if path is a property path (and not a prim path)

Sdf.Path("/set/bicycle.tire:size").IsPrimPropertyPath() # Returns: True

Sdf.Path("/set/bicycle").IsPrimPropertyPath() # Returns: False

# Convenience methods

path = Sdf.Path("/set/bicycle").AppendProperty(Sdf.Path.JoinIdentifier(["tire", "size"]))

namespaced_elements = Sdf.Path.TokenizeIdentifier("tire:size") # Returns: ["tire", "size"]

last_element = Sdf.Path.StripNamespace("/set/bicycle.tire:size") # Returns: 'size'

# With GetPrimPath we can strip away all property encodings

path = Sdf.Path("/set/bicycle.tire:size")

prim_path = path.GetPrimPath() # Returns: Sdf.Path('/set/bicycle')

# We can't actually differentiate between a attribute and relationship based on the property path.

# Hence the "Property" terminology.

# In practice we rarely use/see this as this is a pretty low level API use case.

# The only 'common' case, where you will see this is when calling the Sdf.Layer.Traverse function.

# To encode prim relation targets, we can use:

prim_rel_target_path = Sdf.Path("/set.bikes[/set/bicycle]")

prim_rel_target_path.IsTargetPath() # Returns: True

prim_rel_target_path = Sdf.Path("/set.bikes").AppendTarget("/set/bicycle")

# We can also encode check if a path is a relational attribute.

# ToDo: I've not seen this encoding being used anywhere so far.

# "Normal" attr_spec.connectionsPathList connections as used in shaders

# are encoded via Sdf.Path("/set.bikes[/set/bicycle.someOtherAttr]")

attribute_rel_target_path = Sdf.Path("/set.bikes[/set/bicycles].size")

attribute_rel_target_path.IsRelationalAttributePath() # Returns: True

Data Containers (Prims & Properties)

For Usd to store data at the paths, we need a data container.

To fill this need, Usd has the concept of prims.

Prims can own properties, which can either be attributes or relationships. These store all the data that can be consumed by clients. Prims are added to layers which are then written to on disk Usd files.

In the high level API it looks as follows:

flowchart LR

prim([Usd.Prim]) --> property([Usd.Property])

property --> attribute([Usd.Attribute])

property --> relationship([Usd.Relationship])

In the low level API:

flowchart LR

prim([Sdf.PrimSpec]) --> property([Sdf.PropertySpec])

property --> attribute([Sdf.AttributeSpec])

property --> relationship([Sdf.RelationshipSpec])

Structure

Large parts of the (high level and parts of the low level) API follow this pattern:

- <ContainerType>.Has<Name>() or <ContainerType>.Is<Name>()

- <ContainerType>.Create<Name>()

- <ContainerType>.Get<Name>()

- <ContainerType>.Set<Name>()

- <ContainerType>.Clear<Name>() or .Remove<Name>()

The high level API also has the extra destinction of <ContainerType>.HasAuthored<Name>() vs .Has<Name>().

HasAuthored only returns explicitly defined values, where Has is allowed to return schema fallbacks.

The low level API only has the explicitly defined values, as does not operate on the composed state and is therefore not aware of schemas (at least when it comes to looking up values).

Let's do a little thought experiment:

- If we were to compare Usd to .json files, each layer would be a .json file, where the nested key hierarchy is the Sdf.Path. Each path would then have standard direct key/value pairs like

typeName/specifierthat define metadata as well as theattributesandrelationshipskeys which carry dicts with data about custom properties. - If we would then write an API for the .json files, our low level API would directly edit the keys. This is what the Sdf API does via

Sdf.PrimSpec/Sdf.PropertySpec/Sdf.AttributeSpec/Sdf.RelationshipSpecclasses. These are very small wrappers that set the keys more or less directly. They are still wrappers though. - To make our lives easier, we would also create a high level API, that would call into the low level API. The high level API would then be a public API, so that if we decide to change the low-level API, the high level API still works. Usd does this via the

Usd.Prim/Usd.Property/Usd.Attribute/Usd.Relationshipclasses. These classes provide OOP patterns like Getter/Setters as well as common methods to manipulate the underlying data.

This is in very simplified terms how the Usd API works in terms of data storage.

Table of Contents

TL;DR - Data Containers (Prims/Properties/Attributes/Relationships) In-A-Nutshell

- In order to store data at our

Sdf.Paths, we need data containers. Usd therefore has the concept ofUsd.Prims/Sdf.PrimSpecs, which can holdUsd.Propertyies/Sdf.PropertySpecs - To distinguish between data and data relations,

Usd.Propertyies are separated in:Usd.Attributes/Sdf.AttributeSpecs: These store data of different types (float/ints/arrays/etc.)UsdGeom.Primvars: These are the same as attributes with extra features:- They are created the same way as attributes, except they use the

primvars.<myAttributeName>namespace. - They get inherited down the hierarchy if they are of constant interpolation (They don't vary per point/vertex/prim).

- They are exported to Hydra (Usd's render scene description abstraction API), so you can use them for materials/render settings/etc.

- They are created the same way as attributes, except they use the

Usd.Relationships/Sdf.RelationshipSpecs: These store mapping from prim to prim(s) or attribute to attribute.

What should I use it for?

In production, these are the classes you'll have the most contact with. They handle data creation/storage/modification. They are the heart of what makes Usd be Usd.

Resources

- Usd.Prim

- Usd.Property

- Usd.Attribute

- Usd.Relationship

- Sdf.PrimSpec

- Sdf.PropertySpec

- Sdf.AttributeSpec

- Sdf.RelationshipSpec

Overview

We cover the details for prims and properties in their own sections as they are big enough topics on their own:

Prims

For an overview and summary please see the parent Data Containers section.

Table of Contents

- Prim Basics

- Hierarchy (Parent/Child)

- Schemas

- Composition

- Loading Data (Activation/Visibility)

- Properties (Attributes/Relationships)

Overview

The main purpose of a prim is to define and store properties. The prim class itself only stores very little data:

- Path/Name

- A connection to its properties

- Metadata related to composition and schemas as well as core metadata(specifier, typeName, kind,activation, assetInfo, customData)

This page covers the data on the prim itself, for properties check out this section.

The below examples demonstrate the difference between the higher and lower level API where possible. Some aspects of prims are only available via the high level API, as it acts on composition/stage related aspects.

There is a lot of code duplication in the below examples, so that each example works by itself. In practice editing data is very concise and simple to read, so don't get overwhelmed by all the examples.

Prim Basics

Setting core metadata via the high level is not all exposed on the prim itself via getters/setters, instead the getters/setters come in part from schemas or schema APIs. For example setting the kind is done via the Usd.ModelAPI.

High Level

from pxr import Kind, Sdf, Usd

stage = Usd.Stage.CreateInMemory()

prim_path = Sdf.Path("/cube")

prim = stage.DefinePrim(prim_path, "Xform") # Here defining the prim uses a `Sdf.SpecifierDef` define op by default.

# The specifier and type name is something you'll usually always set.

prim.SetSpecifier(Sdf.SpecifierOver)

prim.SetTypeName("Cube")

# The other core specs are set via schema APIs, for example:

model_API = Usd.ModelAPI(prim)

if not model_API.GetKind():

model_API.SetKind(Kind.Tokens.group)

We are also a few "shortcuts" that check specifiers/kinds (.IsAbstract, .IsDefined, .IsGroup, .IsModel), more about these in the kind section below.

Low Level

The Python lower level Sdf.PrimSpec offers quick access to setting common core metadata via standard class instance attributes:

from pxr import Sdf

layer = Sdf.Layer.CreateAnonymous()

prim_path = Sdf.Path("/cube")

prim_spec = Sdf.CreatePrimInLayer(layer, prim_path) # Here defining the prim uses a `Sdf.SpecifierOver` define op by default.

# The specifier and type name is something you'll usually always set.

prim_spec.specifier = Sdf.SpecifierDef # Or Sdf.SpecifierOver/Sdf.SpecifierClass

prim_spec.typeName = "Cube"

prim_spec.active = True # There is also a prim_spec.ClearActive() shortcut for removing active metadata

prim_spec.kind = "group" # There is also a prim_spec.ClearKind() shortcut for removing kind metadata

prim_spec.instanceable = False # There is also a prim_spec.ClearInstanceable() shortcut for removing instanceable metadata.

prim_spec.hidden = False # A hint for UI apps to hide the spec for viewers

# You can also set them via the standard metadata commands:

from pxr import Sdf

layer = Sdf.Layer.CreateAnonymous()

prim_path = Sdf.Path("/cube")

prim_spec = Sdf.CreatePrimInLayer(layer, prim_path)

# The specifier and type name is something you'll usually always set.

prim_spec.SetInfo(prim_spec.SpecifierKey, Sdf.SpecifierDef) # Or Sdf.SpecifierOver/Sdf.SpecifierClass

prim_spec.SetInfo(prim_spec.TypeNameKey, "Cube")

# These are some other common specs:

prim_spec.SetInfo(prim_spec.ActiveKey, True)

prim_spec.SetInfo(prim_spec.KindKey, "group")

prim_spec.SetInfo("instanceable", False)

prim_spec.SetInfo(prim_spec.HiddenKey, False)

We will look at specifics in the below examples, so don't worry if you didn't understand everything just yet :)

Specifiers

Usd has the concept of specifiers.

The job of specifiers is mainly to define if a prim should be visible to hierarchy traversals. More info about traversals in our Layer & Stage section.

Here is an example USD ascii file with all three specifiers.

def Cube "definedCube" ()

{

double size = 2

}

over Cube "overCube" ()

{

double size = 2

}

class Cube "classCube" ()

{

double size = 2

}

This is how it affects traversal:

### High Level ###

from pxr import Sdf, Usd

stage = Usd.Stage.CreateInMemory()

# Replicate the Usd file example above:

stage.DefinePrim("/definedCube", "Cube").SetSpecifier(Sdf.SpecifierDef)

stage.DefinePrim("/overCube", "Cube").SetSpecifier(Sdf.SpecifierOver)

stage.DefinePrim("/classCube", "Cube").SetSpecifier(Sdf.SpecifierClass)

## Traverse with default filter (USD calls filter 'predicate')

# UsdPrimIsActive & UsdPrimIsDefined & UsdPrimIsLoaded & ~UsdPrimIsAbstract

for prim in stage.Traverse():

print(prim)

# Returns:

# Usd.Prim(</definedCube>)

## Traverse with 'all' filter (USD calls filter 'predicate')

for prim in stage.TraverseAll():

print(prim)

# Returns:

# Usd.Prim(</definedCube>)

# Usd.Prim(</overCube>)

# Usd.Prim(</classCube>)

## Traverse with IsAbstract (== IsClass) filter (USD calls filter 'predicate')

predicate = Usd.PrimIsAbstract

for prim in stage.Traverse(predicate):

print(prim)

# Returns:

# Usd.Prim(</classCube>)

## Traverse with ~PrimIsDefined filter (==IsNotDefined) (USD calls filter 'predicate')

predicate = ~Usd.PrimIsDefined

for prim in stage.Traverse(predicate):

print(prim)

# Returns:

# Usd.Prim(</overCube>)

Sdf.SpecifierDef: def(define)

This specifier is used to specify a prim in a hierarchy, so that is it always visible to traversals.

### High Level ###

from pxr import Sdf, Usd

stage = Usd.Stage.CreateInMemory()

prim_path = Sdf.Path("/bicycle")

# The .DefinePrim method uses a Sdf.SpecifierDef specifier by default

prim = stage.DefinePrim(prim_path, "Xform")

prim.SetSpecifier(Sdf.SpecifierDef)

### Low Level ###

from pxr import Sdf

layer = Sdf.Layer.CreateAnonymous()

prim_path = Sdf.Path("/bicycle")

# The .CreatePrimInLayer method uses a Sdf.SpecifierOver specifier by default

prim_spec = Sdf.CreatePrimInLayer(layer, prim_path)

prim_spec.specifier = Sdf.SpecifierDef

Sdf.SpecifierOver: over

Prims defined with over only get loaded if the prim in another layer has been specified with a defspecified. It gets used when you want to add data to an existing hierarchy, for example layering only position and normals data onto a character model, where the base model has all the static attributes like topology or UVs.

By default stage traversals will skip over over only prims. Prims that only have an over also do not get forwarded to Hydra render delegates.

### High Level ###

from pxr import Sdf, Usd

stage = Usd.Stage.CreateInMemory()

prim_path = Sdf.Path("/bicycle")

# The .DefinePrim method uses a Sdf.SpecifierDef specifier by default

prim = stage.DefinePrim(prim_path, "Xform")

prim.SetSpecifier(Sdf.SpecifierOver)

# or

prim = stage.OverridePrim(prim_path)

# The prim class' IsDefined method checks if a prim (and all its parents) have the "def" specifier.

print(prim.GetSpecifier() == Sdf.SpecifierOver, not prim.IsDefined() and not prim.IsAbstract())

### Low Level ###

from pxr import Sdf

layer = Sdf.Layer.CreateAnonymous()

prim_path = Sdf.Path("/bicycle")

# The .CreatePrimInLayer method uses a Sdf.SpecifierOver specifier by default

prim_spec = Sdf.CreatePrimInLayer(layer, prim_path)

prim_spec.specifier = Sdf.SpecifierOver

Sdf.SpecifierClass: class

The class specifier gets used to define "template" hierarchies that can then get attached to other prims. A typical example would be to create a set of geometry render settings that then get applied to different parts of the scene by creating an inherit composition arc. This way you have a single control point if you want to adjust settings that then instantly get reflected across your whole hierarchy.

- By default stage traversals will skip over

classprims. - Usd refers to class prims as "abstract", as they never directly contribute to the hierarchy.

- We target these class prims via inherits/internal references and specialize composition arcs

### High Level ###

from pxr import Sdf, Usd

stage = Usd.Stage.CreateInMemory()

prim_path = Sdf.Path("/bicycle")

# The .DefinePrim method uses a Sdf.SpecifierDef specifier by default

prim = stage.DefinePrim(prim_path, "Xform")

prim.SetSpecifier(Sdf.SpecifierClass)

# or

prim = stage.CreateClassPrim(prim_path)

# The prim class' IsAbstract method checks if a prim (and all its parents) have the "Class" specifier.

print(prim.GetSpecifier() == Sdf.SpecifierClass, prim.IsAbstract())

### Low Level ###

from pxr import Sdf

layer = Sdf.Layer.CreateAnonymous()

prim_path = Sdf.Path("/bicycle")

# The .CreatePrimInLayer method uses a Sdf.SpecifierOver specifier by default

prim_spec = Sdf.CreatePrimInLayer(layer, prim_path)

prim_spec.specifier = Sdf.SpecifierClass

Type Name

The type name specifies what concrete schema the prim adheres to. In plain english: Usd has the concept of schemas, which are like OOP classes. Each prim can be an instance of a class, so that it receives the default attributes of that class. More about schemas in our schemas section. You can also have prims without a type name, but in practice you shouldn't do this. For that case USD has an "empty" class that just has all the base attributes called "Scope".

### High Level ###

from pxr import Sdf, Usd

stage = Usd.Stage.CreateInMemory()

prim_path = Sdf.Path("/bicycle")

prim = stage.DefinePrim(prim_path, "Xform")

prim.SetTypeName("Xform")

### Low Level ###

from pxr import Sdf

layer = Sdf.Layer.CreateAnonymous()

prim_path = Sdf.Path("/bicycle")

prim_spec = Sdf.CreatePrimInLayer(layer, prim_path)

prim_spec.typeName = "Xform"

# Default type without any fancy bells and whistles:

prim.SetTypeName("Scope")

prim_spec.typeName = "Scope"

Kind

The kind metadata can be attached to prims to mark them what kind hierarchy level it is. This way we can quickly traverse and select parts of the hierarchy that are of interest to us, without traversing into every child prim.

For a full explanation we have a dedicated section: Kinds

Here is the reference code on how to set kinds. For a practical example with stage traversals, check out the kinds page.

### High Level ###

from pxr import Kind, Sdf, Usd

stage = Usd.Stage.CreateInMemory()

prim_path = Sdf.Path("/bicycle")

prim = stage.DefinePrim(prim_path, "Xform")

model_API = Usd.ModelAPI(prim)

model_API.SetKind(Kind.Tokens.component)

# The prim class' IsModel/IsGroup method checks if a prim (and all its parents) are (sub-) kinds of model/group.

model_API.SetKind(Kind.Tokens.model)

kind = model_API.GetKind()

print(kind, (Kind.Registry.GetBaseKind(kind) or kind) == Kind.Tokens.model, prim.IsModel())

model_API.SetKind(Kind.Tokens.group)

kind = model_API.GetKind()

print(kind, (Kind.Registry.GetBaseKind(kind) or kind) == Kind.Tokens.group, prim.IsGroup())

### Low Level ###

from pxr import Kind, Sdf

layer = Sdf.Layer.CreateAnonymous()

prim_path = Sdf.Path("/bicycle")

prim_spec = Sdf.CreatePrimInLayer(layer, prim_path)

prim_spec.SetInfo("kind", Kind.Tokens.component)

Active

The active metadata controls if the prim and its children are loaded or not.

We only cover here how to set the metadata, for more info checkout our Loading mechansims section. Since it is a metadata entry, it can not be animated. For animated pruning we must use visibility.

from pxr import Sdf, Usd

### High Level ###

stage = Usd.Stage.CreateInMemory()

prim_path = Sdf.Path("/bicycle")

prim = stage.DefinePrim(prim_path, "Xform")

prim.SetActive(False)

### Low Level ###

layer = Sdf.Layer.CreateAnonymous()

prim_path = Sdf.Path("/cube")

prim_spec = Sdf.CreatePrimInLayer(layer, prim_path)

prim_spec.active = False

# Or

prim_spec.SetInfo(prim_spec.ActiveKey, True)

Metadata

We go into more detail about metadata in our Metadata section.

As you can see on this page, most of the prim functionality is actually done via metadata, except path, composition and property related functions/attributes.

Tokens (Low Level API)

Prim (as well as property, attribute and relationship) specs also have the tokens they can set as their metadata as class attributes ending with 'Key'.

These 'Key' attributes are the token names that can be set on the spec via SetInfo, for example prim_spec.SetInfo(prim_spec.KindKey, "group")

from pxr import Sdf

layer = Sdf.Layer.CreateAnonymous()

prim_path = Sdf.Path("/cube")

prim_spec = Sdf.CreatePrimInLayer(layer, prim_path)

prim_spec.SetInfo(prim_spec.KindKey, "group")

Debugging (Low Level API)

You can also print a spec as its ascii representation (as it would be written to .usda files):

from pxr import Sdf

layer = Sdf.Layer.CreateAnonymous()

prim_path = Sdf.Path("/cube")

prim_spec = Sdf.CreatePrimInLayer(layer, prim_path)

prim_spec.specifier = Sdf.SpecifierDef

prim_spec.SetInfo(prim_spec.KindKey, "group")

attr_spec = Sdf.AttributeSpec(prim_spec, "size", Sdf.ValueTypeNames.Float)

# Running this

print(prim_spec.GetAsText())

# Returns:

"""

def "cube" (

kind = "group"

)

{

float size

}

"""

Hierarchy (Parent/Child)

From any prim you can navigate to its hierarchy neighbors via the path related methods. The lower level API is dict based when accessing children, the high level API returns iterators or lists.

### High Level ###

# Has: 'IsPseudoRoot'

# Get: 'GetParent', 'GetPath', 'GetName', 'GetStage',

# 'GetChild', 'GetChildren', 'GetAllChildren',

# 'GetChildrenNames', 'GetAllChildrenNames',

# 'GetFilteredChildren', 'GetFilteredChildrenNames',

# The GetAll<MethodNames> return children that have specifiers other than Sdf.SpecifierDef

from pxr import Sdf, Usd

stage = Usd.Stage.CreateInMemory()

prim_path = Sdf.Path("/set/bicycle")

prim = stage.DefinePrim(prim_path, "Xform")

parent_prim = prim.GetParent()

print(prim.GetPath()) # Returns: Sdf.Path("/set/bicycle")

print(prim.GetParent()) # Returns: Usd.Prim("/set")

print(parent_prim.GetChildren()) # Returns: [Usd.Prim(</set/bicycle>)]

print(parent_prim.GetChildrenNames()) # Returns: ['bicycle']

### Low Level ###

from pxr import Sdf

layer = Sdf.Layer.CreateAnonymous()

prim_path = Sdf.Path("/set/bicycle")

prim_spec = Sdf.CreatePrimInLayer(layer, prim_path)

print(prim_spec.path) # Returns: Sdf.Path("/set/bicycle")

print(prim_spec.name) # Returns: "bicycle"

# To rename a prim, you can simply set the name attribute to something else.

# If you want to batch-rename, you should use the Sdf.BatchNamespaceEdit class, see our explanation [here]()

prim_spec.name = "coolBicycle"

print(prim_spec.nameParent) # Returns: Sdf.PrimSpec("/set")

print(prim_spec.nameParent.nameChildren) # Returns: {'coolBicycle': Sdf.Find('anon:0x7f6e5a0e3c00:LOP:/stage/pythonscript3', '/set/coolBicycle')}

print(prim_spec.layer) # Returns: The active layer object the spec is on.

Schemas

We explain in more detail what schemas are in our schemas section. In short: They are the "base classes" of Usd. Applied schemas are schemas that don't define the prim type and instead just "apply" (provide values) for specific metadata/properties

The 'IsA' check is a very valueable check to see if something is an instance of a (base) class. It is similar to Python's isinstance method.

### High Level ###

# Has: 'IsA', 'HasAPI', 'CanApplyAPI'

# Get: 'GetTypeName', 'GetAppliedSchemas'

# Set: 'SetTypeName', 'AddAppliedSchema', 'ApplyAPI'

# Clear: 'ClearTypeName', 'RemoveAppliedSchema', 'RemoveAPI'

from pxr import Sdf, Usd

stage = Usd.Stage.CreateInMemory()

prim_path = Sdf.Path("/bicycle")

prim = stage.DefinePrim(prim_path, "Xform")

# Typed Schemas

prim.SetTypeName("Xform")

# Applied schemas

prim.AddAppliedSchema("SkelBindingAPI")

# AddAppliedSchema does not check if the schema actually exists,

# you have to use this for codeless schemas.

# prim.RemoveAppliedSchema("SkelBindingAPI")

# Single-Apply API Schemas

prim.ApplyAPI("GeomModelAPI") # Older USD versions: prim.ApplyAPI("UsdGeomModelAPI")

### Low Level ###

# To set applied API schemas via the low level API, we just

# need to set the `apiSchemas` key to a Token Listeditable Op.

from pxr import Sdf

layer = Sdf.Layer.CreateAnonymous()

prim_path = Sdf.Path("/bicycle")

prim_spec = Sdf.CreatePrimInLayer(layer, prim_path)

# Typed Schemas

prim_spec.typeName = "Xform"

# Applied Schemas

schemas = Sdf.TokenListOp.Create(

prependedItems=["SkelBindingAPI", "GeomModelAPI"]

)

prim_spec.SetInfo("apiSchemas", schemas)

Prim Type Definition (High Level)

With the prim definition we can inspect what the schemas provide. Basically you are inspecting the class (as to the prim being the instance, if we compare it to OOP paradigms). In production, you won't be using this a lot, it is good to be aware of it though.

from pxr import Sdf, Tf, Usd, UsdGeom

stage = Usd.Stage.CreateInMemory()

prim_path = Sdf.Path("/bicycle")

prim = stage.DefinePrim(prim_path, "Xform")

prim.ApplyAPI("GeomModelAPI") # Older USD versions: prim.ApplyAPI("UsdGeomModelAPI")

prim_def = prim.GetPrimDefinition()

print(prim_def.GetAppliedAPISchemas()) # Returns: ['GeomModelAPI']

print(prim_def.GetPropertyNames())

# Returns: All properties that come from the type name schema and applied schemas

"""

['model:drawModeColor', 'model:cardTextureZPos', 'model:drawMode', 'model:cardTextureZNeg',

'model:cardTextureYPos', 'model:cardTextureYNeg', 'model:cardTextureXPos', 'model:cardTextur

eXNeg', 'model:cardGeometry', 'model:applyDrawMode', 'proxyPrim', 'visibility', 'xformOpOrde

r', 'purpose']

"""

# You can also bake down the prim definition, this won't flatten custom properties though.

dst_prim = stage.DefinePrim("/flattenedExample")

dst_prim = prim_def.FlattenTo(dst_prim)

# This will also flatten all metadata (docs etc.), this should only be used, if you need to export

# a custom schema to an external vendor. (Not sure if this the "official" way to do it, I'm sure

# there are better ones.)

Prim Type Info (High Level)

The prim type info holds the composed type info of a prim. You can think of it as as the class that answers Python type() like queries for Usd. It caches the results of type name and applied API schema names, so that prim.IsA(<typeName>) checks can be used to see if the prim matches a given type.

The prim's prim.IsA(<typeName>) checks are highly performant, you should use them as often as possible when traversing stages to filter what prims you want to edit. Doing property based queries to determine if a prim is of interest to you, is a lot slower.

from pxr import Sdf, Tf, Usd, UsdGeom

stage = Usd.Stage.CreateInMemory()

prim_path = Sdf.Path("/bicycle")

prim = stage.DefinePrim(prim_path, "Xform")

prim.ApplyAPI("GeomModelAPI")

print(prim.IsA(UsdGeom.Xform)) # Returns: True

print(prim.IsA(Tf.Type.FindByName('UsdGeomXform'))) # Returns: True

prim_type_info = prim.GetPrimTypeInfo()

print(prim_type_info.GetAppliedAPISchemas()) # Returns: ['GeomModelAPI']

print(prim_type_info.GetSchemaType()) # Returns: Tf.Type.FindByName('UsdGeomXform')

print(prim_type_info.GetSchemaTypeName()) # Returns: Xform

Composition

We discuss handling composition in our Composition section as it follows some different rules and is a bigger topic to tackle.

Loading Data (Purpose/Activation/Visibility)

We cover this in detail in our Loading Data section.

### High Level ###

from pxr import Sdf, Tf, Usd, UsdGeom

# Has: 'HasAuthoredActive', 'HasAuthoredHidden'

# Get: 'IsActive', 'IsLoaded', 'IsHidden'

# Set: 'SetActive', 'SetHidden'

# Clear: 'ClearActive', 'ClearHidden'

# Loading: 'Load', 'Unload'

stage = Usd.Stage.CreateInMemory()

prim_path = Sdf.Path("/bicycle")

prim = stage.DefinePrim(prim_path, "Xform")

## Activation: Controls subhierarchy loading of prim.

prim.SetActive(False) #

# prim.ClearActive()

## Visibility: Controls the visiblity for render delegates (subhierarchy will still be loaded)

imageable_API = UsdGeom.Imageable(prim)

visibility_attr = imageable_API.CreateVisibilityAttr()

visibility_attr.Set(UsdGeom.Tokens.invisible)

## Purpose: Controls if the prim is visible for what the renderer requested.

imageable_API = UsdGeom.Imageable(prim)

purpose_attr = imageable_API.CreatePurposeAttr()

purpose_attr.Set(UsdGeom.Tokens.render)

## Payload loading: Control payload loading (High Level only as it redirects the request to the stage).

# In our example stage here, we have no payloads, so we don't see a difference.

prim.Load()

prim.Unload()

# Calling this on the prim is the same thing.

prim = stage.GetPrimAtPath(prim_path)

prim.GetStage().Load(prim_path)

prim.GetStage().Unload(prim_path)

## Hidden: # Hint to hide for UIs

prim.SetHidden(False)

# prim.ClearHidden()

### Low Level ###

from pxr import Sdf, UsdGeom

layer = Sdf.Layer.CreateAnonymous()

prim_path = Sdf.Path("/set/bicycle")

prim_spec = Sdf.CreatePrimInLayer(layer, prim_path)

## Activation: Controls subhierarchy loading of prim.

prim_spec.active = False

# prim_spec.ClearActive()

## Visibility: Controls the visiblity for render delegates (subhierarchy will still be loaded)

visibility_attr_spec = Sdf.AttributeSpec(prim_spec, UsdGeom.Tokens.visibility, Sdf.ValueTypeNames.Token)

visibility_attr_spec.default = UsdGeom.Tokens.invisible

## Purpose: Controls if the prim is visible for what the renderer requested.

purpose_attr_spec = Sdf.AttributeSpec(prim_spec, UsdGeom.Tokens.purpose, Sdf.ValueTypeNames.Token)

purpose_attr_spec.default = UsdGeom.Tokens.render

## Hidden: # Hint to hide for UIs

prim_spec.hidden = True

# prim_spec.ClearHidden()

Properties/Attributes/Relationships

We cover properties in more detail in our properties section.

Technically properties are also stored as metadata on the Sdf.PrimSpec. So later on when we look at composition, keep in mind that the prim stack therefore also drives the property stack. That's why the prim index is on the prim level and not on the property level.

...

print(prim_spec.properties, prim_spec.attributes, prim_spec.relationships)

print(prim_spec.GetInfo("properties"))

...

Here are the basics for both API levels:

High Level API

from pxr import Usd, Sdf

# Has: 'HasProperty', 'HasAttribute', 'HasRelationship'

# Get: 'GetProperties', 'GetAuthoredProperties', 'GetPropertyNames', 'GetPropertiesInNamespace', 'GetAuthoredPropertiesInNamespace'

# 'GetAttribute', 'GetAttributes', 'GetAuthoredAttributes'

# 'GetRelationship', 'GetRelationships', 'GetAuthoredRelationships'

# 'FindAllAttributeConnectionPaths', 'FindAllRelationshipTargetPaths'

# Set: 'CreateAttribute', 'CreateRelationship'

# Clear: 'RemoveProperty',

stage = Usd.Stage.CreateInMemory()

prim_path = Sdf.Path("/bicycle")

prim = stage.DefinePrim(prim_path, "Cube")

# As the cube schema ships with a "size" attribute, we don't have to create it first

# Usd is smart enough to check the schema for the type and creates it for us.

size_attr = prim.GetAttribute("size")

size_attr.Set(10)

## Looking up attributes

print(prim.GetAttributes())

# Returns: All the attributes that are provided by the schema

"""

[Usd.Prim(</bicycle>).GetAttribute('doubleSided'), Usd.Prim(</bicycle>).GetAttribute('extent'), Usd.

Prim(</bicycle>).GetAttribute('orientation'), Usd.Prim(</bicycle>).GetAttribute('primvars:displayCol

or'), Usd.Prim(</bicycle>).GetAttribute('primvars:displayOpacity'), Usd.Prim(</bicycle>).GetAttribut

e('purpose'), Usd.Prim(</bicycle>).GetAttribute('size'), Usd.Prim(</bicycle>).GetAttribute('visibili

ty'), Usd.Prim(</bicycle>).GetAttribute('xformOpOrder')]

"""

print(prim.GetAuthoredAttributes())

# Returns: Only the attributes we have written to in the active stage.

# [Usd.Prim(</bicycle>).GetAttribute('size')]

## Looking up relationships:

print(prim.GetRelationships())

# Returns:

# [Usd.Prim(</bicycle>).GetRelationship('proxyPrim')]

box_prim = stage.DefinePrim("/box")

prim.GetRelationship("proxyPrim").SetTargets([box_prim.GetPath()])

# If we now check our properties, you can see both the size attribute

# and proxyPrim relationship show up.

print(prim.GetAuthoredProperties())

# Returns:

# [Usd.Prim(</bicycle>).GetRelationship('proxyPrim'),

# Usd.Prim(</bicycle>).GetAttribute('size')]

## Creating attributes:

# If we want to create non-schema attributes (or even schema attributes without using

# the schema getter/setters), we can run:

tire_size_attr = prim.CreateAttribute("tire:size", Sdf.ValueTypeNames.Float)

tire_size_attr.Set(5)

Low Level API

To access properties on Sdf.PrimSpecs we can call the properties, attributes, relationships methods. These return a dict with the {'name': spec} data.

Here is an example of what is returned when you create cube with a size attribute:

from pxr import Sdf

layer = Sdf.Layer.CreateAnonymous()

prim_path = Sdf.Path("/cube")

prim_spec = Sdf.CreatePrimInLayer(layer, prim_path)

prim_spec.specifier = Sdf.SpecifierDef

attr_spec = Sdf.AttributeSpec(prim_spec, "size", Sdf.ValueTypeNames.Float)

print(prim_spec.attributes) # Returns: {'size': Sdf.Find('anon:0x7f6efe199480:LOP:/stage/python', '/cube.size')}

attr_spec.default = 10

# To remove a property you can run:

# prim_spec.RemoveProperty(attr_spec)

# Let's re-create what we did in the high level API example.

box_prim_path = Sdf.Path("/box")

box_prim_spec = Sdf.CreatePrimInLayer(layer, box_prim_path)

box_prim_spec.specifier = Sdf.SpecifierDef

rel_spec = Sdf.RelationshipSpec(prim_spec, "proxyPrim")

rel_spec.targetPathList.explicitItems = [box_prim_path]

# Get all authored properties (in the active layer only)

print(prim_spec.properties)

# Returns:

"""

{'size': Sdf.Find('anon:0x7ff87c9c2000', '/cube.size'),

'proxyPrim': Sdf.Find('anon:0x7ff87c9c2000', '/cube.proxyPrim')}

"""

Since the lower level API doesn't see the schema properties, these commands will only return what is actually in the layer, in Usd speak authored.

With the high level API you can get the same/similar result by calling prim.GetAuthoredAttributes() as you can see above. When you have multiple layers, the prim.GetAuthoredAttributes(), will give you the created attributes from all layers, where as the low level API only the ones from the active layer.

As mentioned in the properties section, properties is the base class, so the properties method will give you

the merged dict of the attributes and relationship dicts.

Properties

For an overview and summary please see the parent Data Containers section.

Here is an overview of the API structure, in the high level API it looks as follows:

flowchart TD

property([Usd.Property])

property --> attribute([Usd.Attribute])

property --> relationship([Usd.Relationship])

In the low level API:

flowchart TD

property([Sdf.PropertySpec])

property --> attribute([Sdf.AttributeSpec])

property --> relationship([Sdf.RelationshipSpec])

Table of Contents

- Properties

- Attributes

- Relationships

- Schemas

Resources

- Usd.Property

- Usd.Attribute

- Usd.Relationship

- Usd.GeomPrimvar

- Usd.GeomPrimvarsAPI

- Usd.GeomImageable

- Usd.GeomBoundable

Properties

Let's first have a look at the shared base class Usd.Property. This inherits most its functionality from Usd.Object, which mainly exposes metadata data editing. We won't cover how metadata editing works for properties here, as it is extensively covered in our metadata section.

So let's inspect what else the class offers:

# Methods & Attributes of interest:

# 'IsDefined', 'IsAuthored'

# 'FlattenTo'

# 'GetPropertyStack'

from pxr import Usd, Sdf

### High Level ###

stage = Usd.Stage.CreateInMemory()

prim_path = Sdf.Path("/bicycle")

prim = stage.DefinePrim(prim_path, "Cube")

# Check if the attribute defined

attr = prim.CreateAttribute("height", Sdf.ValueTypeNames.Double)

print(attr.IsDefined()) # Returns: True

attr = prim.GetAttribute("someRandomName")

print(attr.IsDefined())

if not attr:

prim.CreateAttribute("someRandomName", Sdf.ValueTypeNames.String)

# Check if the attribute has any written values in any layer

print(attr.IsAuthored()) # Returns: True

attr.Set("debugString")

# Flatten the attribute to another prim (with optionally a different name)

# This is quite usefull when you need to copy a specific attribute only instead

# of a certain prim.

prim_path = Sdf.Path("/box")

prim = stage.DefinePrim(prim_path, "Cube")

attr.FlattenTo(prim, "someNewName")

# Inspect the property value source layer stack.

# Note the method signature takes a time code as an input. If you supply a default time code

# value clips will be stripped from the result.

time_code = Usd.TimeCode(1001)

print(attr.GetPropertyStack(time_code))

### Low Level ###

# The low level API does not offer any "extras" worthy of noting.

As you can see, the .GetProperty/.GetAttribute/.GetRelationship methods return an object instead of just returning None. This way we can still check for .IsDefined(). We can also use them as "truthy"/"falsy" objects, e.g. if not attr which makes it nicely readable.

For a practical of the .GetPropertyStack() method see our Houdini section, where we use it to debug if time varying data actually exists. We also cover it in more detail in our composition section.

Attributes

Attributes in USD are the main data containers to hold all of you geometry related data. They are the only element in USD that can be animateable.

Attribute Types (Detail/Prim/Vertex/Point) (USD Speak: Interpolation)

To determine on what geo prim element an attribute applies to, attributes are marked with interpolation metadata.

We'll use Houdini's naming conventions as a frame of reference here:

You can read up more info in the Usd.GeomPrimvar docs page.

UsdGeom.Tokens.constant(Same as Houdini'sdetailattributes): Global attributes (per prim in the hierarchy).UsdGeom.Tokens.uniform(Same as Houdini'sprimattributes): Per prim attributes (e.g. groups of polygons).UsdGeom.Tokens.faceVarying(Same as Houdini'svertexattributes): Per vertex attributes (e.g. UVs).UsdGeom.Tokens.varying(Same as Houdini'svertexattributes): This the same as face varying, except for nurbs surfaces.UsdGeom.Tokens.vertex(Same as Houdini'spointattributes): Per point attributes (e.g. point positions).

To summarize:

| Usd Name | Houdini Name |

|---|---|

| UsdGeom.Tokens.constant | detail |

| UsdGeom.Tokens.uniform | prim |

| UsdGeom.Tokens.faceVarying | vertex |

| UsdGeom.Tokens.vertex | point |

from pxr import Sdf, Usd, UsdGeom

stage = Usd.Stage.CreateInMemory()

prim_path = Sdf.Path("/bicycle")

prim = stage.DefinePrim(prim_path, "Xform")

attr = prim.CreateAttribute("tire:size", Sdf.ValueTypeNames.Float)

attr.Set(10)

attr.SetMetadata("interpolation", UsdGeom.Tokens.constant)

### Low Level ###

from pxr import Sdf, UsdGeom

layer = Sdf.Layer.CreateAnonymous()

prim_path = Sdf.Path("/bicycle")

prim_spec = Sdf.CreatePrimInLayer(layer, prim_path)

prim_spec.specifier = Sdf.SpecifierDef

prim_spec.typeName = "Xform"

attr_spec = Sdf.AttributeSpec(prim_spec, "tire:size", Sdf.ValueTypeNames.Double)

attr_spec.default = 10

attr_spec.interpolation = UsdGeom.Tokens.constant

# Or

attr_spec.SetInfo("interpolation", UsdGeom.Tokens.constant)

For attributes that don't need to be accessed by Hydra (USD's render abstraction interface), we don't need to set the interpolation. In order for an attribute, that does not derive from a schema, to be accessible for the Hydra, we need to namespace it with primvars:, more info below at primvars. If the attribute element count for non detail (constant) attributes doesn't match the corresponding prim/vertex/point count, it will be ignored by the renderer (or crash it).

When we set schema attributes, we don't need to set the interpolation, as it is provided from the schema.

Attribute Data Types & Roles

We cover how to work with data classes in detail in our data types/roles section. For array attributes, USD has implemented the buffer protocol, so we can easily convert from numpy arrays to USD Vt arrays and vice versa. This allows us to write high performance attribute modifications directly in Python. See our Houdini Particles section for a practical example.

from pxr import Gf, Sdf, Usd

stage = Usd.Stage.CreateInMemory()

prim_path = Sdf.Path("/bicycle")

prim = stage.DefinePrim(prim_path, "Xform")

# When we create attributes, we have to specify the data type/role via a Sdf.ValueTypeName

attr = prim.CreateAttribute("tire:size", Sdf.ValueTypeNames.Float)

# We can then set the attribute to a value of that type.

# Python handles the casting to the correct precision automatically for base data types.

attr.Set(10)

# For attributes the `typeName` metadata specifies the data type/role.

print(attr.GetTypeName()) # Returns: Sdf.ValueTypeNames.Float

# Non-base data types

attr = prim.CreateAttribute("someArray", Sdf.ValueTypeNames.Half3Array)

attr.Set([Gf.Vec3h()] * 3)

attr = prim.CreateAttribute("someAssetPathArray", Sdf.ValueTypeNames.AssetArray)

attr.Set(Sdf.AssetPathArray(["testA.usd", "testB.usd"]))

### Low Level ###

from pxr import Gf, Sdf

layer = Sdf.Layer.CreateAnonymous()

prim_path = Sdf.Path("/bicycle")

prim_spec = Sdf.CreatePrimInLayer(layer, prim_path)

prim_spec.specifier = Sdf.SpecifierDef

prim_spec.typeName = "Xform"

attr_spec = Sdf.AttributeSpec(prim_spec, "tire:size", Sdf.ValueTypeNames.Double)

# We can then set the attribute to a value of that type.

# Python handles the casting to the correct precision automatically for base data types.

attr_spec.default = 10

# For attributes the `typeName` metadata specifies the data type/role.

print(attr_spec.typeName) # Returns: Sdf.ValueTypeNames.Float

# Non-base data types

attr_spec = Sdf.AttributeSpec(prim_spec, "someArray", Sdf.ValueTypeNames.Half3Array)

attr_spec.default = ([Gf.Vec3h()] * 3)

attr_spec = Sdf.AttributeSpec(prim_spec, "someAssetPathArray", Sdf.ValueTypeNames.AssetArray)

attr_spec.default = Sdf.AssetPathArray(["testA.usd", "testB.usd"])

# Creating an attribute spec with the same data type as an existing attribute (spec)

# is as easy as passing in the type name from the existing attribute (spec)

same_type_attr_spec = Sdf.AttributeSpec(prim_spec, "tire:radius", attr.GetTypeName())

# Or

same_type_attr_spec = Sdf.AttributeSpec(prim_spec, "tire:radius", attr_spec.typeName)

The role specifies the intent of the data, e.g. points, normals, color and will affect how renderers/DCCs handle the attribute. This is not a concept only for USD, it is there in all DCCs. For example a color vector doesn't need to be influenced by transform operations where as normals and points do.

Here is a comparison to when we create an attribute a float3 normal attribute in Houdini.

Static (Default) Values vs Time Samples vs Value Blocking

We talk about how animation works in our animation section.

from pxr import Sdf, Usd

### High Level ###

stage = Usd.Stage.CreateInMemory()

prim_path = Sdf.Path("/bicycle")

prim = stage.DefinePrim(prim_path, "Cube")

size_attr = prim.GetAttribute("size")

for frame in range(1001, 1005):

time_code = Usd.TimeCode(float(frame - 1001))

# .Set() takes args in the .Set(<value>, <frame>) format

size_attr.Set(frame, time_code)

print(size_attr.Get(1005)) # Returns: 4

### Low Level ###

from pxr import Sdf

layer = Sdf.Layer.CreateAnonymous()

prim_path = Sdf.Path("/bicycle")

prim_spec = Sdf.CreatePrimInLayer(layer, prim_path)

prim_spec.specifier = Sdf.SpecifierDef

prim_spec.typeName = "Cube"

attr_spec = Sdf.AttributeSpec(prim_spec, "size", Sdf.ValueTypeNames.Double)

for frame in range(1001, 1005):

value = float(frame - 1001)

# .SetTimeSample() takes args in the .SetTimeSample(<path>, <frame>, <value>) format

layer.SetTimeSample(attr_spec.path, frame, value)

print(layer.QueryTimeSample(attr_spec.path, 1005)) # Returns: 4

We can set an attribute with a static value (USD speak default) or with time samples (or both, checkout the animation section on how to handle this edge case). We can also block it, so that USD sees it as if no value was written. For attributes from schemas with default values, this will make it fallback to the default value.

from pxr import Sdf, Usd

stage = Usd.Stage.CreateInMemory()

prim_path = Sdf.Path("/bicycle")

prim = stage.DefinePrim(prim_path, "Cube")

size_attr = prim.GetAttribute("size")

## Set default value

time_code = Usd.TimeCode.Default()

size_attr.Set(10, time_code)

# Or:

size_attr.Set(10) # The default is to set `default` (non-per-frame) data.

## Set per frame value

for frame in range(1001, 1005):

time_code = Usd.TimeCode(frame)

size_attr.Set(frame, time_code)

# Or

# As with Sdf.Path implicit casting from strings in a lot of places in the USD API,

# the time code is implicitly casted from a Python float.

# It is recommended to do the above, to be more future proof of

# potentially encoding time unit based samples.

for frame in range(1001, 1005):

size_attr.Set(frame, frame)

## Block the value. This makes the attribute look to USD as if no value was written.

# For attributes from schemas with default values, this will make it fallback to the default value.

height_attr = prim.CreateAttribute("height", Sdf.ValueTypeNames.Float)

height_attr.Set(Sdf.ValueBlock())

For more examples (also for the lower level API) check out the animation section.

Re-writing a range of values from a different layer

An important thing to note is that when we want to re-write the data of an attribute from a different layer, we have to get all the existing data first and then write the data, as otherwise we are changing the value source. To understand better why this happens, check out our composition section.

Let's demonstrate this:

Change existing values | Click to expand code

from pxr import Sdf, Usd

# Spawn reference data

layer = Sdf.Layer.CreateAnonymous()

prim_path = Sdf.Path("/bicycle")

prim_spec = Sdf.CreatePrimInLayer(layer, prim_path)

prim_spec.specifier = Sdf.SpecifierDef

prim_spec.typeName = "Cube"

attr_spec = Sdf.AttributeSpec(prim_spec, "size", Sdf.ValueTypeNames.Double)

for frame in range(1001, 1010):

value = float(frame - 1001)

layer.SetTimeSample(attr_spec.path, frame, value)

# Reference data

stage = Usd.Stage.CreateInMemory()

ref = Sdf.Reference(layer.identifier, "/bicycle")

prim_path = Sdf.Path("/bicycle")

prim = stage.DefinePrim(prim_path)

ref_api = prim.GetReferences()

ref_api.AddReference(ref)

# Now if we try to read and write the data at the same time,

# we overwrite the (layer composition) value source. In non USD speak:

# We change the layer the data is coming from. Therefore we won't see

# the original data after setting the first time sample.

size_attr = prim.GetAttribute("size")

for time_sample in size_attr.GetTimeSamples():

size_attr_value = size_attr.Get(time_sample)

print(time_sample, size_attr_value)

size_attr.Set(size_attr_value, time_sample)

# Prints:

"""

1001.0 0.0

1002.0 0.0

1003.0 0.0

1004.0 0.0

1005.0 0.0

1006.0 0.0

1007.0 0.0

1008.0 0.0

1009.0 0.0

"""

# Let's undo the previous edit.

prim.RemoveProperty("size") # Removes the local layer attribute spec

# Collect data first ()

data = {}

size_attr = prim.GetAttribute("size")

for time_sample in size_attr.GetTimeSamples():

size_attr_value = size_attr.Get(time_sample)

print(time_sample, size_attr_value)

data[time_sample] = size_attr_value

# Prints:

"""

1001.0 0.0

1002.0 1.0

1003.0 2.0

1004.0 3.0

1005.0 4.0

1006.0 5.0

1007.0 6.0

1008.0 7.0

1009.0 8.0

"""

# Then write it

for time_sample, value in data.items():

size_attr_value = size_attr.Get(time_sample)

size_attr.Set(value + 10, time_sample)

For heavy data it would be impossible to load everything into memory to offset it. USD's solution for that problem is Layer Offsets.

What if we don't want to offset the values, but instead edit them like in the example above?

In a production pipeline you usually do this via a DCC that imports the data, edits it and then re-exports it (often per frame and loads it via value clips). So we mitigate the problem by distributing the write to a new file(s) on multiple machines/app instances. Sometimes though we actually have to edit the samples in an existing file, for example when post processing data. In our point instancer section we showcase a practical example of when this is needed.

To edit the time samples directly, we can open the layer as a stage or edit the layer directly. To find the layers you can inspect the layer stack or value clips, but most of the time you know the layers, as you just wrote to them:

from pxr import Sdf, Usd

# Spawn example data, this would be a file on disk

layer = Sdf.Layer.CreateAnonymous()

prim_path = Sdf.Path("/bicycle")

prim_spec = Sdf.CreatePrimInLayer(layer, prim_path)

prim_spec.specifier = Sdf.SpecifierDef

prim_spec.typeName = "Cube"

attr_spec = Sdf.AttributeSpec(prim_spec, "size", Sdf.ValueTypeNames.Double)

for frame in range(1001, 1010):

value = float(frame - 1001)

layer.SetTimeSample(attr_spec.path, frame, value)

# Edit content

layer_identifiers = [layer.identifier]

for layer_identifier in layer_identifiers:

prim_path = Sdf.Path("/bicycle")

### High Level ###

stage = Usd.Stage.Open(layer_identifier)

prim = stage.GetPrimAtPath(prim_path)

size_attr = prim.GetAttribute("size")

for frame in size_attr.GetTimeSamples():

size_attr_value = size_attr.Get(frame)

# .Set() takes args in the .Set(<value>, <frame>) format

size_attr.Set(size_attr_value + 125, frame)

### Low Level ###

# Note that this edits the same layer as the stage above.

layer = Sdf.Layer.FindOrOpen(layer_identifier)

prim_spec = layer.GetPrimAtPath(prim_path)

attr_spec = prim_spec.attributes["size"]

for frame in layer.ListTimeSamplesForPath(attr_spec.path):

value = layer.QueryTimeSample(attr_spec.path, frame)

layer.SetTimeSample(attr_spec.path, frame, value + 125)

Time freezing (mesh) data

If we want to time freeze a prim (where the data comes from composed layers), we simply re-write a specific time sample to the default value.

Pro Tip | Time Freeze | Click to expand code

from pxr import Sdf, Usd

# Spawn example data, this would be a file on disk

layer = Sdf.Layer.CreateAnonymous()

prim_path = Sdf.Path("/bicycle")

prim_spec = Sdf.CreatePrimInLayer(layer, prim_path)

prim_spec.specifier = Sdf.SpecifierDef

prim_spec.typeName = "Cube"

attr_spec = Sdf.AttributeSpec(prim_spec, "size", Sdf.ValueTypeNames.Double)

for frame in range(1001, 1010):

value = float(frame - 1001)

layer.SetTimeSample(attr_spec.path, frame, value)

# Reference data

stage = Usd.Stage.CreateInMemory()

ref = Sdf.Reference(layer.identifier, "/bicycle")

prim_path = Sdf.Path("/bicycle")

prim = stage.DefinePrim(prim_path)

ref_api = prim.GetReferences()

ref_api.AddReference(ref)

# Freeze content

freeze_frame = 1001

attrs = []

for prim in stage.Traverse():

### High Level ###

for attr in prim.GetAuthoredAttributes():

# attr.Set(attr.Get(freeze_frame))

### Low Level ###

attrs.append(attr)

### Low Level ###

active_layer = stage.GetEditTarget().GetLayer()

with Sdf.ChangeBlock():

for attr in attrs:

attr_spec = active_layer.GetAttributeAtPath(attr.GetPath())

if not attr_spec:

prim_path = attr.GetPrim().GetPath()

prim_spec = active_layer.GetPrimAtPath(prim_path)

if not prim_spec:

prim_spec = Sdf.CreatePrimInLayer(active_layer, prim_path)

attr_spec = Sdf.AttributeSpec(prim_spec, attr.GetName(),attr.GetTypeName())

attr_spec.default = attr.Get(freeze_frame)

If you have to do this for a whole hierarchy/scene, this does mean that you are flattening everything into your memory, so be aware! USD currently offers no other mechanism.

We'll leave "Time freezing" data from the active layer to you as an exercise.

Hint | Time Freeze | Active Layer | Click to expand

We just need to write the time sample of your choice to the attr_spec.default attribute and clear the time samples ;

Attribute To Attribute Connections (Node Graph Encoding)

Attributes can also encode relationship-like paths to other attributes. These connections are encoded directly on the attribute. It is up to Usd/Hydra to evaluate these "attribute graphs", if you simply connect two attributes, it will not forward attribute value A to connected attribute B (USD does not have a concept for a mechanism like that (yet)).

Attribute connections are encoded from target attribute to source attribute.

The USD file syntax is: <data type> <attribute name>.connect = </path/to/other/prim.<attribute name>

Currently the main use of connections is encoding node graphs for shaders via the UsdShade.ConnectableAPI.

Here is an example of how a material network is encoded.

def Scope "materials"

{

def Material "karmamtlxsubnet" (

)

{

token outputs:mtlx:surface.connect = </materials/karmamtlxsubnet/mtlxsurface.outputs:out>

def Shader "mtlxsurface" ()

{

uniform token info:id = "ND_surface"

string inputs:edf.connect = </materials/karmamtlxsubnet/mtlxuniform_edf.outputs:out>

token outputs:out

}

def Shader "mtlxuniform_edf"

{

uniform token info:id = "ND_uniform_edf"

color3f inputs:color.connect = </materials/karmamtlxsubnet/mtlx_constant.outputs:out>

token outputs:out

}

def Shader "mtlx_constant"

{

uniform token info:id = "ND_constant_float"

float outputs:out

}

}

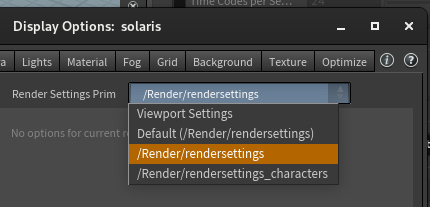

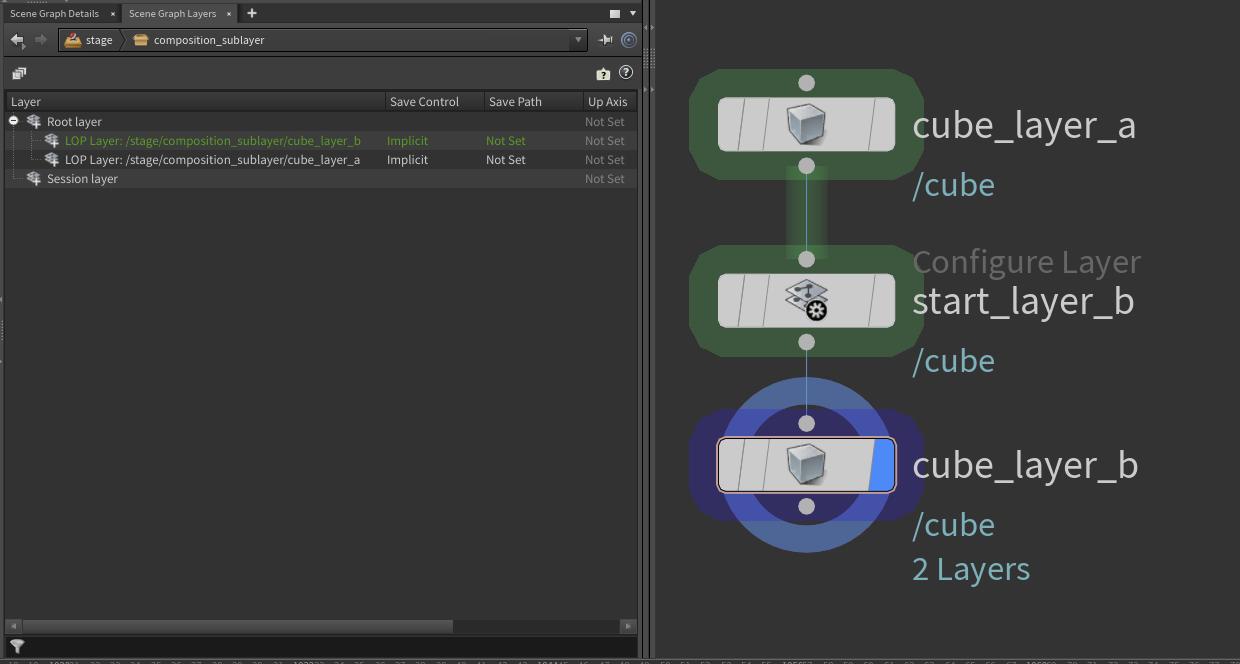

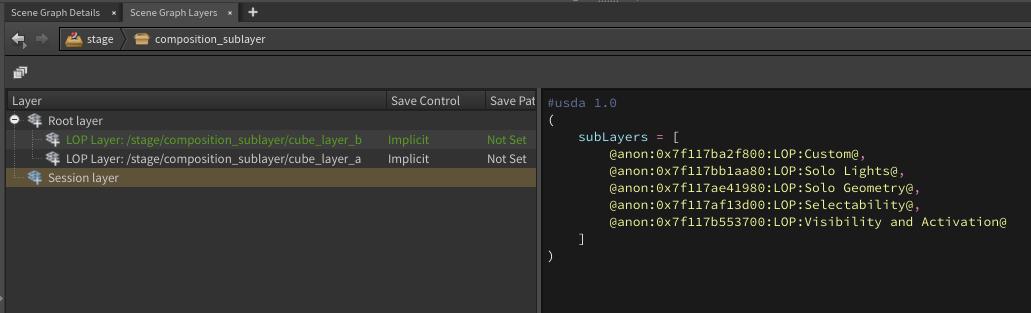

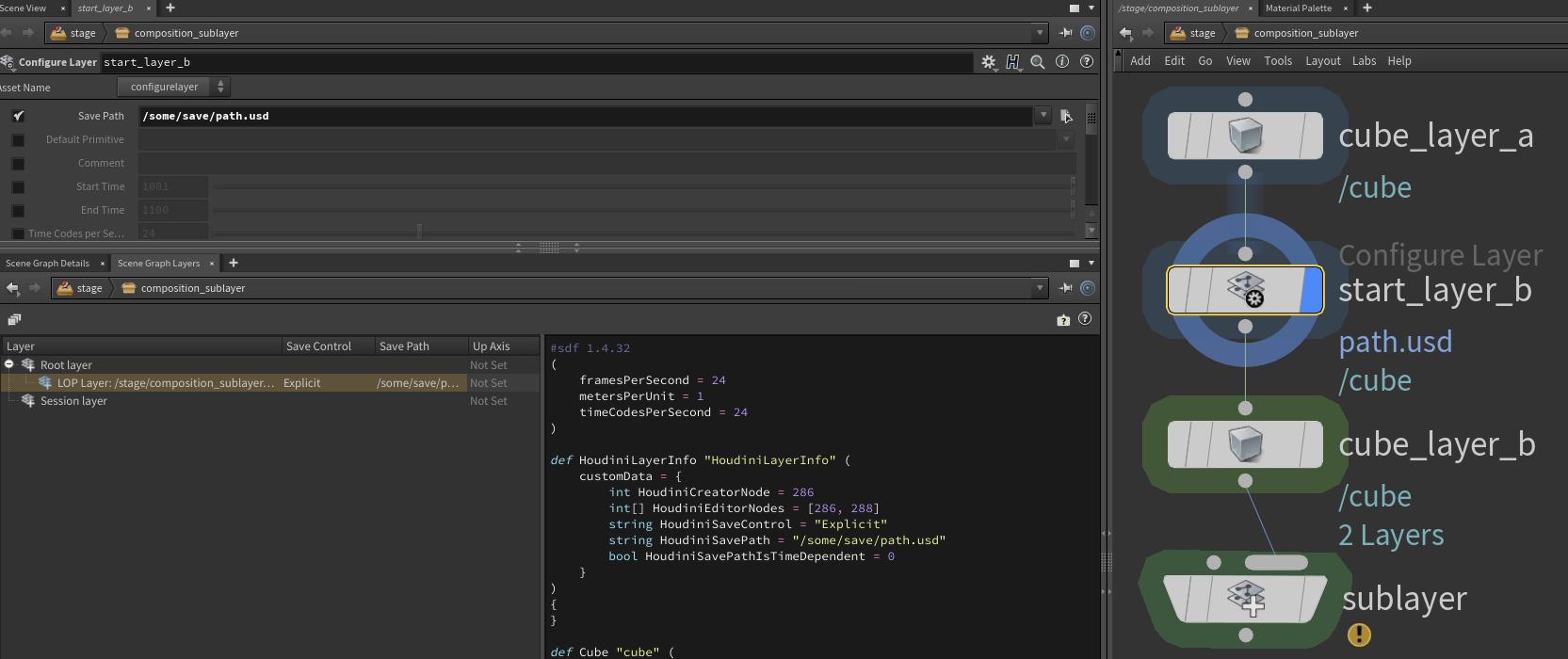

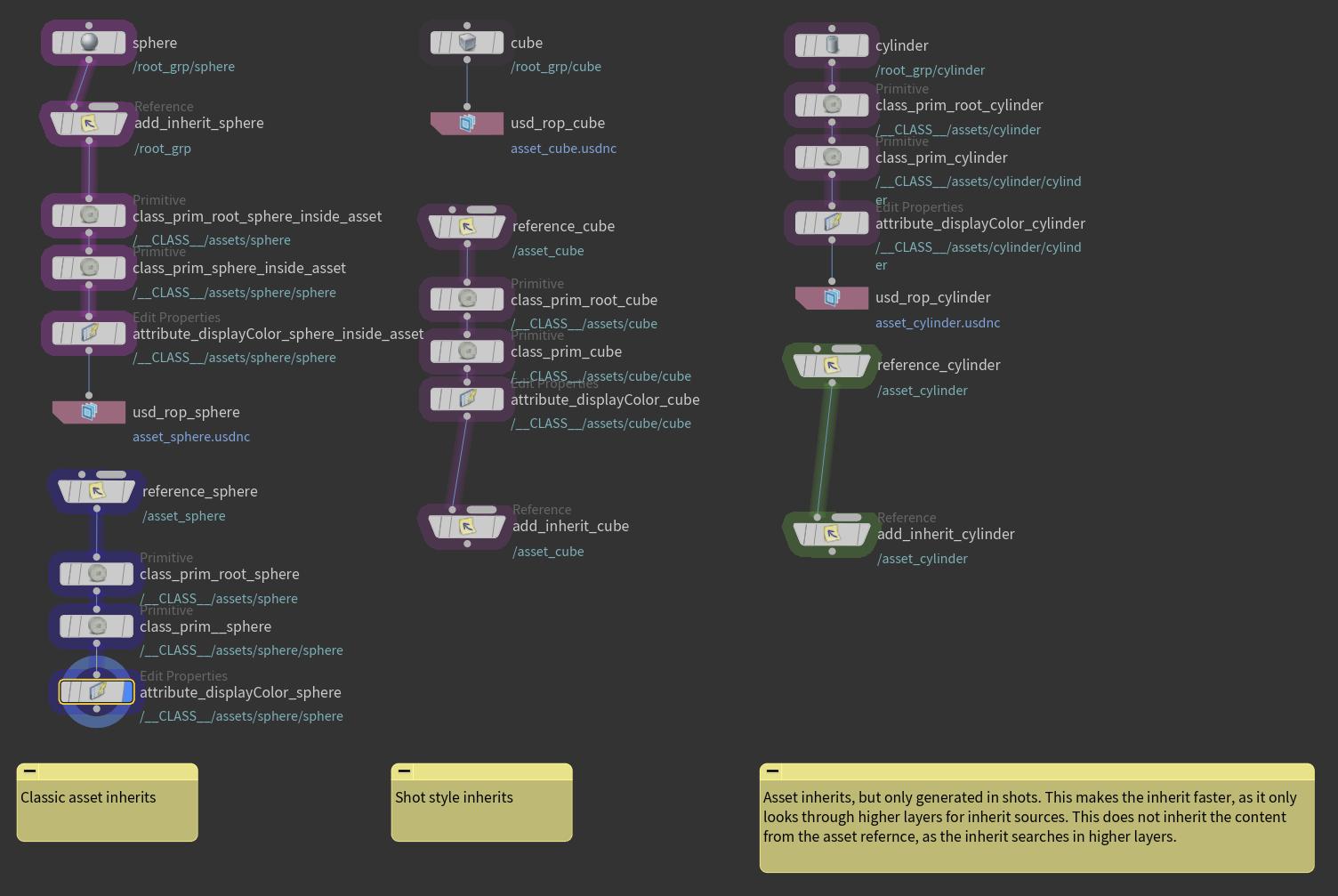

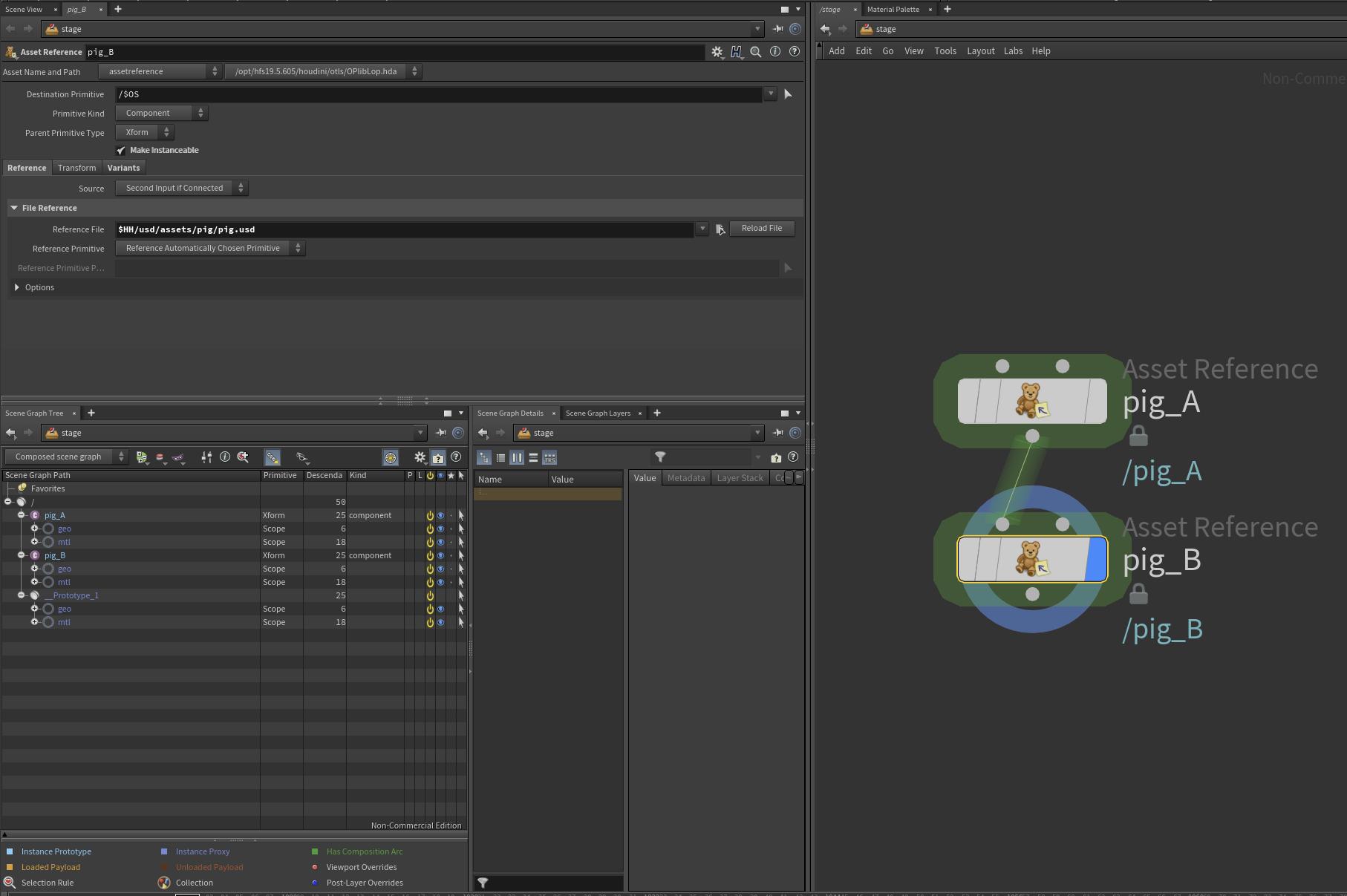

}